Types of Bias in Research #1: Generalisation of Findings

Address different kinds of researcher bias in your literature review to question the generalisation of findings in the papers you include.

Categories of Researcher Bias

While listening to a YouTube talk from brokenscience.org, I was directed to a peer-reviewed article by Trafimow (2021). A wonderful share. This paper helps you critique the generalisation of results by focusing on categories of potential researcher bias.

How often are you at a loss as to "what to write about" or "where the research gap is"?

Thankfully, journal articles (for the most part) provide a rich vein of active thinking (critique) tips and tricks. In this context: consideration of the generalisation of results.

Most interesting to reflect on was that results are not the only element of a research study that can be generalised. Trafimow (2021) points out that a research aim may be to generalise the theory guiding the study design, rather than the study findings per se. Determining what is being generalised requires considering sets of assumptions, and with them, researcher bias.

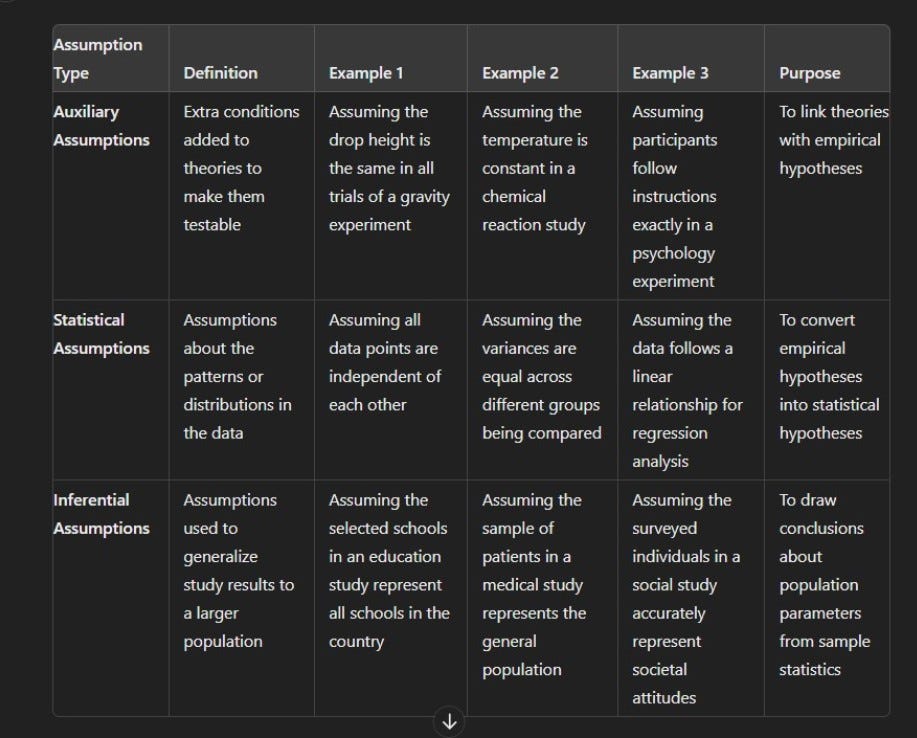

The first part of Trafimow's (2021) paper unpacks these assumptions with detailed examples. Here is a table summarising Trafimow's assumption types that influence the generalisation of findings (with examples added to his).

A theory may be imbued with auxiliary assumptions (educated [or not] guesses that are not part of the theory per se). Because an empirical hypothesis contains concepts, there can be several ways for the researcher to measure any given concept.

The statistical hypothesis provides a concrete representation of the concept. For example, the concept 'higher' might be operationalised as 'a mean score on a scale above the value X'.

Alternatively, the researcher could operationalise the concept as 'a median score on a scale above the value X'.

Choices.

How a researcher specifies the statistical representation of a concept is arbitrary. This is helpful, as more than one statistical hypothesis can be generated to test the empirical hypothesis.
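To make the mean-versus-median choice concrete, here is a minimal Python sketch. The scores and the threshold X = 3 are made up for illustration (they are not from Trafimow's paper), and the function names are hypothetical. It shows that two equally reasonable statistical hypotheses for the same empirical hypothesis ("this group scores higher") can reach different verdicts on the same data.

```python
import statistics

# Hypothetical scale scores from one group (illustrative only, not real data).
# One high outlier skews the distribution to the right.
scores = [1, 2, 2, 3, 10]

# Hypothetical threshold X that defines "higher".
X = 3

def higher_by_mean(values, threshold):
    """Operationalisation A: 'higher' means the mean score exceeds the threshold."""
    return statistics.mean(values) > threshold

def higher_by_median(values, threshold):
    """Operationalisation B: 'higher' means the median score exceeds the threshold."""
    return statistics.median(values) > threshold

print("mean:", statistics.mean(scores), "->", higher_by_mean(scores, X))        # mean: 3.6 -> True
print("median:", statistics.median(scores), "->", higher_by_median(scores, X))  # median: 2 -> False
```

The single outlier pulls the mean above X but leaves the median below it, so the empirical hypothesis is "supported" under one operationalisation and not the other. That gap is exactly where the researcher's bridging assumption does its work.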

Bias as a Research Gap

Trafimow (2021) identifies a gap that perhaps you, like me, have not considered. How does a researcher make the choice to bridge empirical and statistical hypotheses? Assumptions must be made, and again researcher bias comes into play. This is not 'bad', simply something to think about when evaluating a generalisation of a research finding.

Pic: Greek food, Ta Mystika (publicdomainpictures.net)

The second part of the article unpacks inferential hypotheses and their accompanying assumptions. A good argument is made for eschewing p-value hypothesis testing. However, as this is a topic I am still wrapping my mind around, I'll leave it for a future post.

Anyway, I thought to myself: what a useful article for developing my own and others' active critical thinking skills for a journal critique, an essay, a research report, or a thesis implications section.

It was not an easy read at times. Although it is written in a simple and straightforward manner, with a visual model included, it took me a few days of dipping into some parts and re-reading others. Well worth the effort, though.

What strategies do you think psychology researchers could use to minimise the four types of bias Trafimow (2021) has identified?

Light & Life~ Charmayne

References

Trafimow, D. (2021). Generalizing across auxiliary, statistical, and inferential assumptions. Journal for the Theory of Social Behaviour, 52(1), 37–48. https://doi.org/10.1111/jtsb.12296

Great article. I remember, as a young, naive man, when I first endeavored to further my education. I now truly see how the mainstream media (left and right) use fallacies of distraction to manipulate their audiences and brainwash them with their agenda. I made a code listing, like the ones we used in the Army to standardize and recognize reports of enemy activity, for the battles of everyday life. It helps me decipher through the noise, burn up the chaff, and understand, especially when they use these distracting techniques. If we just "follow the money trail", we can see the real deal.

Fallacy Codes:

•A2R – Appeal to Ridicule: Mocking another’s perspective & claims.

•A2A – Appeal to Authority: Supporting the argument by citing an “expert” to provide “validation.”

•A2E – Appeal to Emotion: If it feels good, therefore it must be true; utilizing scare tactics to impede or elicit action.

•AP – Attack the Person: Distract from the subject & redirect the argument to the person.

•CQ – Complex Question: Presenting two (2) or more questions & allowing only one (1) answer.

•FE – False Effect: Using a false correlation of cause & effect from one (1) issue to another. Assuming that because issue one (1) is wrong, issue two (2) is also wrong.

•INS – Insignificance: Inflating impact or importance of a minor cause.

•FD – False Dilemma: Having two (2) or more choices & selecting one (1) choice means rejecting the other. Two (2) choices are presented when there are actually more options.

•PW – Poisoning the Well: Preemptively attacking/discrediting the source before they speak.

•RH – Red Herring: Distracting from the subject w/ an irrelevant topic.

•RadA – Reductio ad Absurdum: A false scenario is ridiculous; therefore, the alternative is true.

•SS – Slippery Slope: If this, then that. Acceptance of 1 argument points to or leads to another argument.

•FI – From Ignorance: Because something is not known to be true, it is assumed to be false.

•REP – Repetition: Using repetition to build a perception.

•SM – Strawman: Attacking a weakened or misrepresented version of the other person’s argument.

•SoS – Style Over Substance: Treating an attractive presentation as if it makes the argument more right.